Generating videos programmatically can be useful nowadays, with increasingly popular platforms like YouTube, Instagram, or TikTok. I want to share a cheap yet powerful method for generating videos from frames, using FaaS (Function as a Service) products and simple in-browser JavaScript. I used this technique several years ago to generate hundreds of custom videos per week, which were then uploaded to YouTube automatically for broader audience reach.

Given the recent abundance of new JavaScript libraries and frameworks, I can see this method being even more potent and relevant now than it was when I originally used it. Let’s dive in!

Overview

Let’s say we want to generate a 1-2 minute custom video based on data provided by our users.

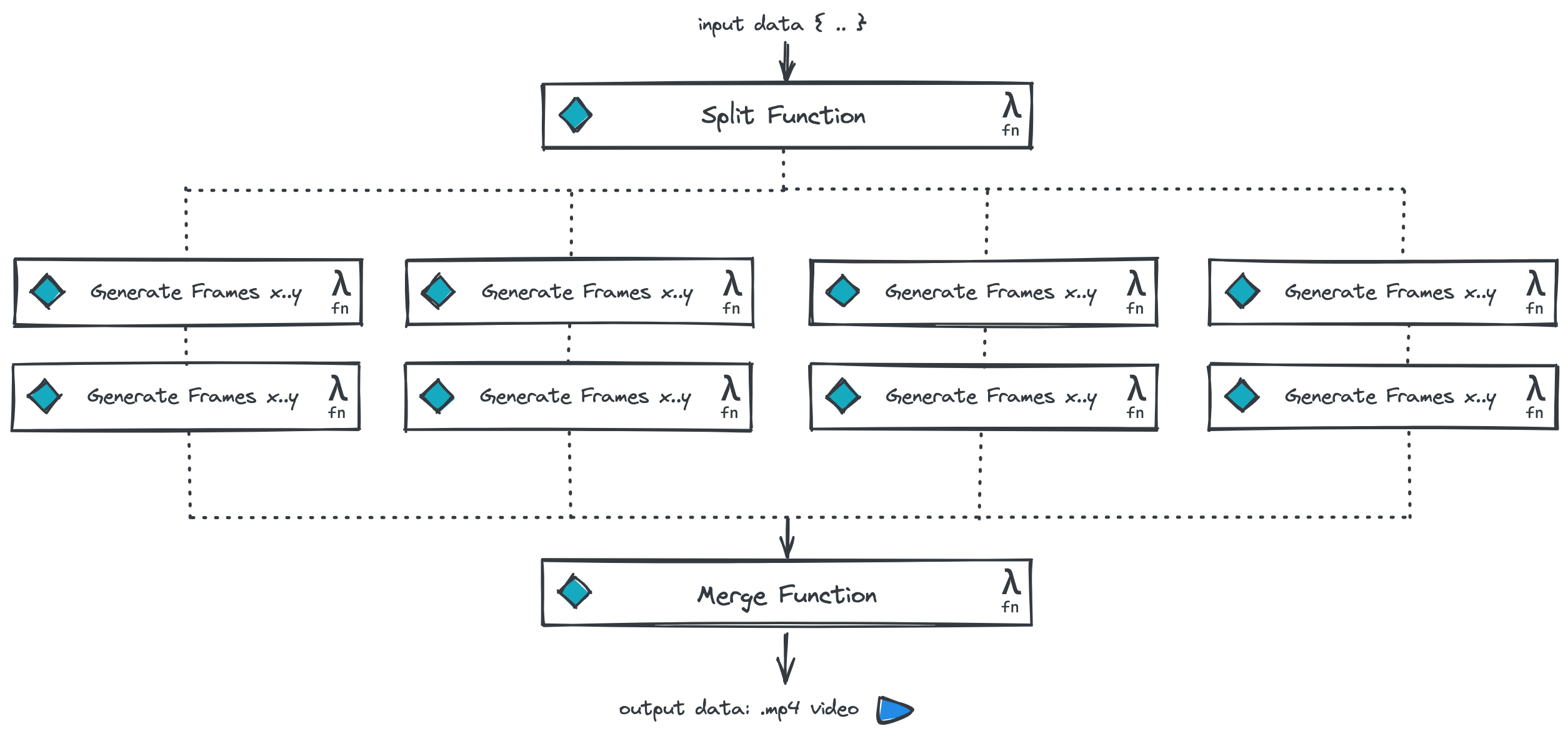

Here’s an overview of the process, consisting of 3 main steps (functions). Later on, I’ll dive into each individual step to explain it in more detail.

- Trigger the first Split function using an API call, providing all the necessary input data for the generated video.

- The “Split” function triggers n Generate Frames functions (8 on this diagram).

- Finally, the Merge function ties everything together and produces the final video container.

JavaScript frontend animation engine

With the browser as our main video generation engine, we need a JavaScript animation/graphics framework. I’ve used a random slideshow generation library, but the possibilities are endless here. Just to list a few good options:

My need at the time was generating nice, slow videos displaying photos animated with the Ken Burns effect (example). Each video represented a real estate property for sale, along with the seller’s information. Think about what you need and whether it is achievable in JavaScript at all. While JS animation and graphics capabilities have become powerful in recent years, they can’t yet create as rich an animation experience as dedicated desktop software (e.g. Adobe After Effects).

Important: regardless of the JS solution you choose, it must have the ability to control animation/video frames and play/pause the animation. We’ll be splitting the generation work across n functions to speed up the whole process through parallelisation, and without the ability to render any given millisecond of the animation, this method will be hard to realise.
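To make this requirement concrete, here is a minimal sketch of a “seekable” animation, where every visual property is a pure function of the timestamp. The `kenBurnsAt` and `toCssTransform` names and the zoom/pan values are hypothetical and are not taken from the library I used.

```javascript
// Hypothetical Ken Burns-style state computed purely from a timestamp.
// Nothing depends on previously rendered frames, so any worker can jump
// straight to its own slice of the video.
function kenBurnsAt(timeMs, durationMs) {
  // Clamp progress to [0, 1] so out-of-range timestamps stay valid.
  const progress = Math.min(Math.max(timeMs / durationMs, 0), 1);
  return {
    scale: 1 + 0.2 * progress,        // slow zoom from 100% to 120%
    translateXPercent: -5 * progress  // slow pan to the left
  };
}

// In the browser, this state could be applied as a CSS transform string.
function toCssTransform(state) {
  const scale = state.scale.toFixed(4);
  const shift = state.translateXPercent.toFixed(4);
  return `scale(${scale}) translateX(${shift}%)`;
}

// Render the state halfway through a 5-second slide.
console.log(toCssTransform(kenBurnsAt(2500, 5000)));
// prints "scale(1.1000) translateX(-2.5000%)"
```

If your library exposes something equivalent (a `setTime`, `seek`, or `progress` control), the rest of the method should carry over.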

The process

Split function

First, we need to trigger the process. Along with the trigger, we pass the unique data used later to generate our video. This allows us to generate exclusive, custom videos at scale.

In my example, I’ve passed the property and brokerage’s information in a simple JSON file. This data is later passed on to the JS library generating the animation.

Aside from managing the data, the primary job of this Split function is figuring out how many functions to trigger and delegating video frames generation to these functions.

Let’s assume we generate a 24 FPS video for a traditional, cinematic experience (feel free to tweak it to 30, 60, or even 120 FPS). The video length itself might be dynamic and dependent on the input data. If the video is 3 seconds long, it doesn’t make sense to spawn 20 AWS Lambda functions to generate 3 * 24 / 20 = 3.6 frames each. But if the video is 3 minutes long (180 seconds), then with 20 parallel functions, each one would generate 180 * 24 / 20 = 216 frames.

The number of functions, n, is up to you. The more functions run in parallel, the shorter the whole process becomes. The beauty of this approach is that you pay roughly the same for 100 parallel functions as for 1 function doing the same work. What matters here is the total compute time, which should remain the same (unless you have a resource-intensive bootstrap process for your animation engine). It’s even possible to make n dynamic, depending on the requested video length, complexity, or other input parameters.
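As a rough illustration of that trade-off, the sketch below estimates wall-clock time and total billed compute for a given number of workers. The `estimateRun` function and all of its input numbers (100 ms per frame, 2 s bootstrap) are assumptions made up for the example, and real FaaS billing also depends on memory size and rounding rules.

```javascript
// All inputs are hypothetical; measure your own workload before relying on them.
function estimateRun({ totalFrames, workers, msPerFrame, bootstrapMs }) {
  const framesPerWorker = Math.ceil(totalFrames / workers);
  return {
    // Workers run in parallel, so wall-clock time is one worker's duration.
    wallClockMs: bootstrapMs + framesPerWorker * msPerFrame,
    // Frame work is constant, but the bootstrap cost is paid once per worker.
    billedMs: workers * bootstrapMs + totalFrames * msPerFrame
  };
}

// A 3-minute video at 24 FPS, as in the example above.
const video = { totalFrames: 180 * 24, msPerFrame: 100, bootstrapMs: 2000 };

console.log(estimateRun({ ...video, workers: 1 }));
// { wallClockMs: 434000, billedMs: 434000 }
console.log(estimateRun({ ...video, workers: 20 }));
// { wallClockMs: 23600, billedMs: 472000 }
```

With these made-up numbers, 20 workers finish about 18 times sooner for roughly 9% more billed compute, and the difference comes entirely from the repeated bootstrap.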

Here, let’s assume we spawn 8 parallel functions.

The Split function triggers 8 functions in parallel, passing all the input data, along with the calculated time markers:

- Video start time (milliseconds)

- Video end time (milliseconds)

- FPS

These parameters are all the Generate Frames function needs to produce the requested frames for the subset of the video.
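Computing those markers could look like the sketch below. It is only an illustration: `splitIntoSegments` and the extra `firstFrame` field (handy for naming files later) are my own additions here, and the end time is treated as exclusive.

```javascript
// Split a video into contiguous segments whose boundaries fall on frame
// boundaries. Function and field names are illustrative.
function splitIntoSegments(durationMs, fps, workers) {
  const totalFrames = Math.round((durationMs / 1000) * fps);
  const base = Math.floor(totalFrames / workers);
  const remainder = totalFrames % workers;
  const segments = [];
  let firstFrame = 0;
  for (let i = 0; i < workers; i++) {
    // Spread leftover frames over the first `remainder` workers.
    const frameCount = base + (i < remainder ? 1 : 0);
    if (frameCount === 0) continue; // more workers than frames
    segments.push({
      firstFrame,
      videoStartTime: (firstFrame * 1000) / fps,              // milliseconds
      videoEndTime: ((firstFrame + frameCount) * 1000) / fps, // exclusive
      fps
    });
    firstFrame += frameCount;
  }
  return segments;
}

// A 60-second video at 24 FPS split across 8 workers: 180 frames each.
const segments = splitIntoSegments(60000, 24, 8);
console.log(segments[1]);
// { firstFrame: 180, videoStartTime: 7500, videoEndTime: 15000, fps: 24 }
```

Each segment object, together with the user’s input data, is then the payload for one Generate Frames invocation.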

Generate Frames function

This function is more complicated; it spawns the browser, launches the animation engine, and manipulates it to produce the desired output (via browser API).

Its workflow looks like this:

- Run browser (I recommend Chromium)

- Run JS application with the animation (providing the input data making the animation custom and unique)

- Operate the animation using the library/framework API controls to produce screenshots

  - Start at the videoStartTime

  - Pause the animation

  - Do a screenshot & save

  - Scrub the animation (setTime) to videoStartTime + idx * 1000 / FPS (in milliseconds, where idx is the frame index within this segment)

  - If we reached the videoEndTime, stop the program

  - If not, do a screenshot & save

- After we’ve done all the screenshots, save them in the cloud along with the screenshots from other parallel functions (I’ve used AWS S3). Use ordered numbers for file names to help order frames into a full video.
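The loop above can be sketched as follows. This is not my original code: `planFrames`, `captureFrames`, the `frame-000123.png` naming scheme, and the injected `seek` and `screenshot` callbacks are all assumptions for illustration. With Puppeteer, `seek` might wrap a `page.evaluate(...)` call into your animation library’s time control, and `screenshot` might wrap `page.screenshot({ path })`.

```javascript
// Plan the frames for one segment: a timestamp plus an ordered file name.
function planFrames({ firstFrame, videoStartTime, videoEndTime, fps }) {
  // Derive the frame count once; rounding guards against float drift.
  const count = Math.round(((videoEndTime - videoStartTime) * fps) / 1000);
  const frames = [];
  for (let idx = 0; idx < count; idx++) {
    const timeMs = videoStartTime + (idx * 1000) / fps;
    // Zero-padded global frame number keeps files sortable across workers.
    const number = String(firstFrame + idx).padStart(6, "0");
    frames.push({ timeMs, fileName: `frame-${number}.png` });
  }
  return frames;
}

// `seek` and `screenshot` are injected so the loop stays library-agnostic.
async function captureFrames(segment, { seek, screenshot }) {
  for (const frame of planFrames(segment)) {
    await seek(frame.timeMs);         // scrub the paused animation
    await screenshot(frame.fileName); // save the rendered frame
  }
}

// Dry run with fake callbacks that only log what would happen.
captureFrames(
  { firstFrame: 180, videoStartTime: 7500, videoEndTime: 7625, fps: 24 },
  {
    seek: async (timeMs) => console.log("seek to", timeMs),
    screenshot: async (fileName) => console.log("save", fileName)
  }
);
```

Keeping the frame planning separate from the browser calls also makes it easy to check frame counts and file names without launching Chromium.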

Hint: use a high resolution for the original generated video. You can always scale the video down for other formats (e.g. using AWS Elastic Transcoder).

Merge function

At this point we should have a directory with all video frames ready to be collated into a full video. I’ve used ffmpeg – the Swiss Army knife when it comes to video.

I won’t get into details of the exact ffmpeg command parameters to use as it’s going to be different depending on your objectives, codecs you want to use, and other factors. Please follow the official documentation for this. Example:

    ffmpeg -r 1/5 -i img%03d.png -c:v libx264 -vf fps=25 -pix_fmt yuv420p out.mp4

And there’s the video! But there’s something missing...
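One caveat about the example command: `-r 1/5` shows each input image for five seconds, which suits a slideshow rather than a frame sequence. For frames rendered at 24 FPS, the input rate should match the rate used during generation. The sketch below builds such an argument list; `buildMergeArgs` and the `frame-%06d.png` pattern are assumptions for illustration, and the resulting array could be handed to Node’s `child_process.spawn`.

```javascript
// Build ffmpeg arguments for a zero-padded PNG frame sequence.
function buildMergeArgs({ fps, framePattern, output }) {
  return [
    "-framerate", String(fps), // input rate: one image per video frame
    "-i", framePattern,        // printf-style pattern for the frame files
    "-c:v", "libx264",         // H.264 video codec
    "-pix_fmt", "yuv420p",     // pixel format most players can decode
    output
  ];
}

const mergeArgs = buildMergeArgs({
  fps: 24,
  framePattern: "frame-%06d.png",
  output: "out.mp4"
});
console.log(["ffmpeg", ...mergeArgs].join(" "));
// prints "ffmpeg -framerate 24 -i frame-%06d.png -c:v libx264 -pix_fmt yuv420p out.mp4"
```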

Adding audio

Let’s add sound to the video 🎶!

How can we add sound to an existing video? Yup. We’ll use ffmpeg again. As above, I recommend you read the official docs to understand how ffmpeg creates the final video file container from multiple video and/or audio streams. Here’s a quick example:

    ffmpeg -i input.mp4 -i input.mp3 -c copy -map 0:v:0 -map 1:a:0 output.mp4

That’s it!

I wasn’t convinced about this method at first. Having experience with automating work inside a browser (mainly for automated tests), I’d run into many quirks and reliability issues. I couldn’t believe that a browser could be trusted to, say, not skip a frame or cause some other problem. But the truth is that it works marvellously. The key part is having the ability to scrub the animation at the millisecond level. The browser automation API is mature and reliable at this point (see Puppeteer), and I had very few issues using this method (almost no maintenance at all).

Technical considerations & summary

It’s been a while since I did this, and that’s the primary reason I’m omitting a lot of low-level details of the setup. But I’ve decided to share this regardless: the core idea here is more important than the specific tools and infrastructure used. If anything, there’s an even broader choice of technologies available today to achieve similar results.

For frontend/animation, I can imagine using React.js, Three.js, or any other powerful animation or frontend framework.

Infrastructure-wise, one could use AWS Lambda (as I have), but there are now many players in this space. Microsoft Azure, Google Cloud, IBM Cloud Functions, OpenShift Cloud Functions: these are the big names right now. For a more independent, cloud-native approach, OpenFaaS could be used (it enables creating your own FaaS on Kubernetes).

One last thing worth mentioning is that this approach is tremendously cost-efficient. We’re generating videos on demand, without maintaining any long-running infrastructure, paying just for the compute time used. Keep in mind that generating a 1-second video this way does not take 1 second. The function has to generate 24 frames and save the screenshots, which should take something closer to milliseconds on any decent modern hardware.

If you would like to learn more about this project, feel free to contact me 🤙. I’d be happy to help set it up for you both in terms of the code or required cloud infrastructure.